Using GPT-3 and OpenAI Codex to convert natural language to bash

4 October 2021

OpenAI recently released OpenAI Codex, a language prediction model based on GPT-3. I got access to the Codex API, which lets developers build things on top of Codex, and have been playing around with it for the last few weeks. Since I use the terminal every day but always forget CLI commands, I created x, a CLI tool that converts natural language to bash commands. This post is about what I learned and how x works.

Want to see the code or install the CLI tool? Check out the project on GitHub!

Parts:

- What is GPT-3 and OpenAI Codex?

- Natural language to bash

- Challenges with prompt engineering

- The future of interfaces

What is GPT-3 and OpenAI Codex?

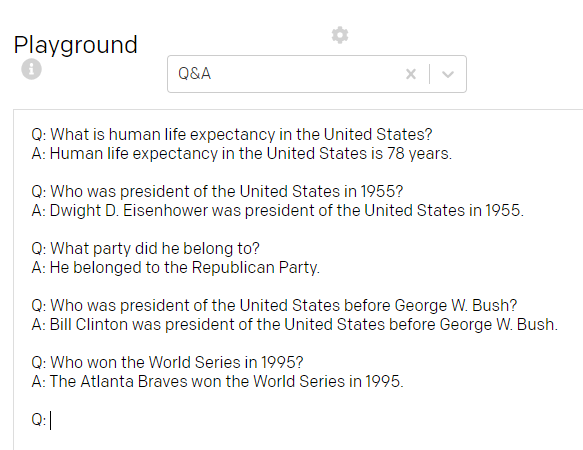

GPT-3 is a deep learning-based language prediction model. Basically, you give the model some text, and it will generate more text based on the initial snippet. For example, you can ask it a question, and it will give you an answer.

OpenAI Codex is a descendant of GPT-3 that is specialized for programming applications. One existing use case for Codex is GitHub Copilot, a tool that autocompletes code. For example, each line of code below was generated from only the comment above it.

```javascript
// generate a random number between 5 and 10
const randomNumber = Math.floor(Math.random() * (10 - 5 + 1)) + 5;

// regex to match "12rem", "5px"
const regex = /^([0-9]+(\.[0-9]+)?)(rem|px)$/;

// get the price of btc in usd
const price = await fetch("https://api.coindesk.com/v1/bpi/currentprice.json")
  .then(res => res.json())
  .then(data => data.bpi.USD.rate);
```

Natural language to bash

OpenAI has exposed the Codex model through its API, which is what I've been playing around with. To use the API, you give Codex a so-called prompt: a short text snippet that it continues by generating more text.
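For a rough idea of what talking to the API involves, here is a minimal JavaScript sketch. It assumes the 2021-era completions endpoint (https://api.openai.com/v1/engines/{engine}/completions), the davinci-codex engine name, and the parameter values shown. None of these come from x's source, and the Codex engines may no longer be available, so treat it as an illustration of the request shape rather than a working client.

```javascript
// Hypothetical sketch: engine name, endpoint and parameter values are
// assumptions, not taken from x's source.
const ENGINE = "davinci-codex";
const API_URL = `https://api.openai.com/v1/engines/${ENGINE}/completions`;

// Build the JSON body for a completion request.
function buildCompletionRequest(prompt) {
  return {
    prompt,                // the text Codex continues
    max_tokens: 100,       // upper bound on the length of the continuation
    temperature: 0,        // assumed: minimal randomness (x's setting isn't stated)
    stop: ["\n\n", "\n#"], // stop before a blank line or the next comment
  };
}

// The API returns the continuation in choices[0].text; trim surrounding whitespace.
function extractCommand(response) {
  const choice = response.choices && response.choices[0];
  return choice ? choice.text.trim() : "";
}

// Send the request (needs a global fetch, e.g. Node 18+, and an API key).
async function complete(prompt, apiKey) {
  const res = await fetch(API_URL, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify(buildCompletionRequest(prompt)),
  });
  if (!res.ok) throw new Error(`API request failed: ${res.status}`);
  return extractCommand(await res.json());
}
```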

The trickiest part is figuring out what prompt to use. This process of developing prompts, so-called prompt engineering, is an art in itself. To do it well, you need to understand what the GPT-3 model knows about the world and how to get the model to generate useful results. This is a bigger topic, and there are many blog posts about it.


Coming up with the right prompt was a manual and subjective process for me. I tried multiple prompts and tested how well they translated various natural language commands into useful bash commands. I found that the following prompt worked best:

```bash
# Bash
# <insert natural language to convert>
```
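Filling in that template takes only a few lines. Here is a minimal sketch of how a tool like x could assemble the prompt; buildPrompt is a hypothetical name, and putting the header and query on separate lines with a trailing newline is an assumption about the exact formatting, not necessarily what x sends.

```javascript
// Hypothetical helper: assumes "# Bash" and the query each sit on their own
// line, with a trailing newline so Codex starts writing the command next.
function buildPrompt(query) {
  // Collapse whitespace so the whole query stays inside one shell comment.
  const oneLine = query.replace(/\s+/g, " ").trim();
  return `# Bash\n# ${oneLine}\n`;
}

// Example: buildPrompt("undo last git commit")
// returns "# Bash\n# undo last git commit\n"
```

Collapsing newlines matters because a line break inside the query would end the comment, and Codex could then read the rest of the query as part of the script.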

On this front, GPT-3/Codex is a black box. Why does one prompt work better than another? Only the neural net knows… Here are some prompts I tried:

- `# Bash`

- `# Bash command`

- `# Shell`

- `#!/bin/bash`
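The manual comparison described above can be organized with a small harness that runs every candidate header against the same set of test queries. This is a minimal sketch: the header list mirrors the prompts above, but the function names are hypothetical, and `complete` stands for any async function that maps a prompt to Codex's completion.

```javascript
// The prompt headers listed above; queries are supplied by the caller.
const CANDIDATE_HEADERS = ["# Bash", "# Bash command", "# Shell", "#!/bin/bash"];

// Build one prompt per (header, query) pair, grouped by header.
function buildComparisonPrompts(headers, queries) {
  const prompts = [];
  for (const header of headers) {
    for (const query of queries) {
      prompts.push({ header, query, prompt: `${header}\n# ${query}\n` });
    }
  }
  return prompts;
}

// Send every prompt through `complete` (async prompt -> text) one at a time
// and collect the results for side-by-side review.
async function runComparison(headers, queries, complete) {
  const results = [];
  for (const { header, query, prompt } of buildComparisonPrompts(headers, queries)) {
    results.push({ header, query, command: await complete(prompt) });
  }
  return results;
}
```

Sending the prompts sequentially keeps the sketch simple and avoids bursts of requests; printing the collected results with console.table(results) gives a quick side-by-side view. Judging which header wins is still the manual, subjective part.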

Challenges with prompt engineering

The brief prompts sent to the Codex API cause some problems:

1. What if the user isn't satisfied with the bash command Codex suggests?

We have very limited control over what Codex outputs. If the user isn't satisfied, we want to come up with a new suggestion. There are two ways of doing that:

- Use the temperature option in the API, which controls how much randomness is in the generated output text

- Send a different prompt to the API and hope that Codex outputs something better

For more creative text generation, temperature is an important parameter to tweak to get good results. However, for a tool like x, a developer probably doesn't want randomness to play a role in the suggestions she gets, which is why I opted for the latter approach.
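To make the temperature trade-off concrete: in the standard formulation, temperature divides the model's raw scores (logits) before a softmax turns them into probabilities, so a low temperature concentrates probability on the top token and a high one flattens the distribution. The sketch below shows that textbook version, not OpenAI's internal implementation, and the logits are made-up numbers.

```javascript
// Standard temperature-scaled softmax: p_i = exp(z_i / T) / sum_j exp(z_j / T).
// T must be > 0; as T approaches 0 this approaches always picking the top token.
function softmaxWithTemperature(logits, temperature) {
  if (temperature <= 0) throw new RangeError("temperature must be > 0");
  const scaled = logits.map((z) => z / temperature);
  const max = Math.max(...scaled); // subtract the max for numerical stability
  const exps = scaled.map((z) => Math.exp(z - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Sample an index from a probability distribution (inverse-CDF sampling).
function sampleIndex(probs, rand = Math.random) {
  let r = rand();
  for (let i = 0; i < probs.length; i++) {
    r -= probs[i];
    if (r < 0) return i;
  }
  return probs.length - 1; // guard against floating-point rounding
}

const logits = [2.0, 1.0, 0.5]; // hypothetical scores for three candidate tokens
const cold = softmaxWithTemperature(logits, 0.2); // top token dominates
const hot = softmaxWithTemperature(logits, 2.0);  // probabilities flatten out
```

With the cold distribution, sampling almost always returns the same token; with the hot one, other tokens are picked regularly. That is the randomness a developer using x would rather avoid, so variety has to come from changing the prompt instead.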

When someone wants a new suggestion, we send a different prompt to the API based on the initial prompt. For example:

```bash
# Bash
# fetch btc price
curl -s https://api.coindesk.com/v1/bpi/currentprice.json | jq '.bpi.USD.rate'
# Same command, but formatted differently
```

With each retry the suggestions tend to get worse, but sometimes Codex comes up with something that's actually better.
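The retry prompt in the example above can be assembled mechanically from the original query and the rejected suggestion. Below is a minimal sketch; buildRetryPrompt is a hypothetical name, and chaining every earlier suggestion into the prompt on repeated retries is an assumption, since the example only shows a single retry.

```javascript
// Hypothetical sketch: rebuild the prompt for a retry by appending each
// rejected suggestion followed by the "formatted differently" instruction.
function buildRetryPrompt(query, previousCommands) {
  let prompt = `# Bash\n# ${query}\n`;
  for (const command of previousCommands) {
    prompt += `${command}\n# Same command, but formatted differently\n`;
  }
  return prompt;
}
```

Called with "fetch btc price" and the curl command from the example, this reproduces the four-line prompt shown above, ending with a newline so Codex writes the alternative command next.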

2. How does x know what OS I'm running and what programs are available?

It doesn't. If you're running Ubuntu and ask

```bash
x open stackoverflow.com on chromium
```

it will suggest

```bash
chromium-browser https://stackoverflow.com
```

even though it should be

```bash
chromium https://stackoverflow.com
```

Or if you're on Windows and ask

```bash
x send request to google.com
```

it uses curl instead of PowerShell's Invoke-WebRequest.

To improve this, we need better prompts. For example, we should probably use slightly different prompts based on the OS to make suggestions more tailored. Again, this takes us back to prompt engineering and is a topic for another time.
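One way the per-OS idea could look, as a minimal sketch: pick the prompt header from Node's process.platform. x does not do this, the function names are hypothetical, and the header strings are untested guesses that would need the same manual prompt testing described earlier.

```javascript
// Hypothetical sketch: choose a prompt header based on the OS.
// Platform values follow Node's process.platform ("win32", "darwin", "linux", ...).
function promptHeaderFor(platform) {
  switch (platform) {
    case "win32":
      return "# PowerShell";
    case "darwin":
      return "# Bash (macOS)";
    case "linux":
      return "# Bash (Linux)";
    default:
      return "# Bash"; // fall back to the generic prompt
  }
}

// Build the prompt for the current OS unless a platform is passed explicitly.
function buildOsAwarePrompt(query, platform = process.platform) {
  return `${promptHeaderFor(platform)}\n# ${query}\n`;
}
```

A finer-grained version could also mention the Linux distribution or which browsers are installed, which is exactly the information the chromium example above was missing.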

The future of interfaces

It seems obvious that models like GPT-3 will become prevalent in the coming years. Language is the main medium humans use to exchange information. Until now, we've had to translate our thoughts into text that computers can understand. But increasingly, computers will be able to map natural language to computer code. Codex and x are a step in that direction: instead of looking up "how to undo last git commit" on Google or Stack Overflow, I can simply get the command directly.

If you want to try x or see the code, check out the project on GitHub!

Also, I'd suggest you join the OpenAI API waitlist to get access to Codex!